By Jey Purushotham.

Accounting firms are the keepers of tremendous amounts of sensitive client and engagement data. Most firms are exploring how to leverage that data with Generative AI, which puts confidential information at risk of being shared with users who are not authorized to access it: the “oversharing” problem.

For some time the most widely used iteration of natural language processing was ChatGPT, which harnessed publicly available data to generate answers to user prompts. However, with the current iteration of Gen AI, most popularly via Microsoft Copilot, this power is now being applied to a firm’s proprietary data.

Firms’ fears around oversharing are valid, but surmountable.

Accounting firms are typically exploring Copilot by purchasing a small number of licenses, providing those licenses to a select group of professionals at the firm, and then monitoring how those professionals use it. Some of the use cases they’re seeing are expected, like generating meeting summaries and improving PowerPoint presentations. Others are less customary, like improving corporate research by gathering the various names a company might go by, or creating a dictionary of commonly used terms within the firm. Firms are also watching to see which license holders are not using Copilot, and redistributing those licenses as necessary.

Addressing General Data Concerns First

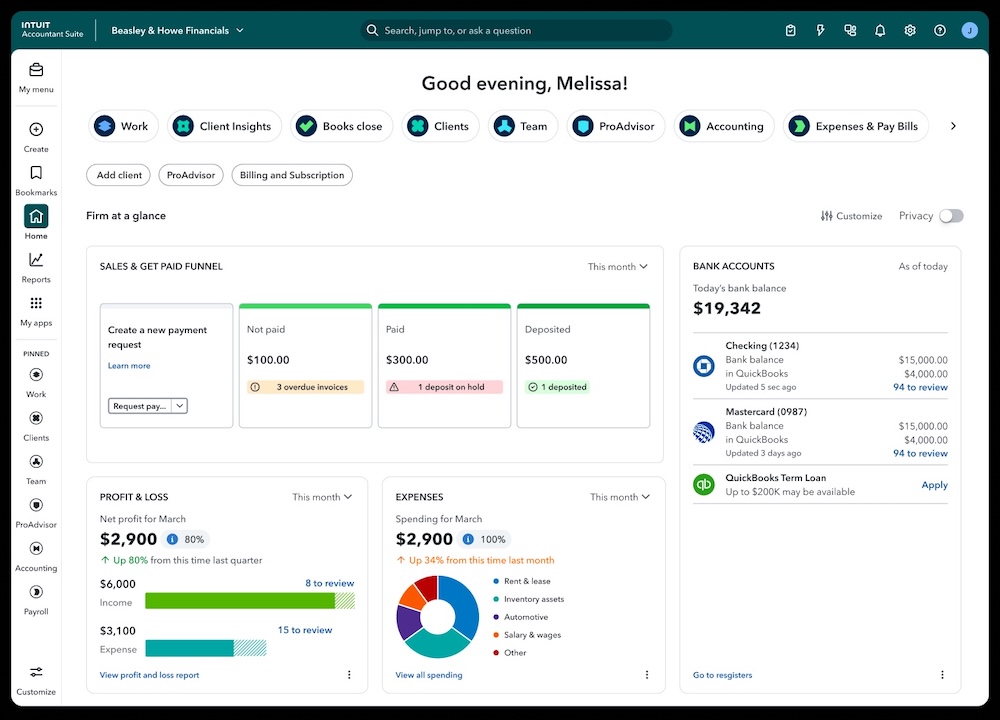

Accountants’ biggest concerns about adopting advanced technology are data quality (46%) and security (38%), according to Intapp’s recent Technology Perceptions Survey. They also want to confirm the investment adds value.

Accuracy is often an issue with generative software such as ChatGPT, which can supercharge regular search by summarizing what it finds on the internet, whereas a search engine provides links to web pages for the user to examine. But the information ChatGPT pulls and interprets is uncontrolled and comes from unknown sources. Even more concerning, these open-knowledge applications also share a firm’s information with the outside world, so most accounting firms have guidelines or policies to limit the use of non-proprietary AI.

But there are ways to innovate effectively, knowing exactly which AI can be used securely, which applications add value, and how to manage risk that is specific to the accounting industry and its clients.

Data Security Takes Priority

Unlike many industries, the accounting industry is built on a model of clients and engagements. From a business perspective, the most damaging security issues can occur when oversharing of this client/engagement data occurs.

Let’s say Firm ABC is working on a strategic advisory project for Universal Bigness and is obligated to ensure that no ABC professional working with a competitor can view the data for this engagement. How does ABC enforce that, setting up ethical walls and other barriers across all its systems and users? And how does ABC keep that web of walls current as its people frequently shift onto and off of engagements with Universal Bigness competitors?
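To make the Firm ABC scenario concrete, here is a minimal sketch of how such a wall could be derived from staffing data instead of being maintained by hand. Everything in it is hypothetical: the `EthicalWall` class, the `blocked_users` function, the engagement identifiers, and the user names are illustrative assumptions, not part of Copilot or any Microsoft or Intapp product.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class EthicalWall:
    """Hypothetical rule: anyone staffed on a conflicting engagement
    must not see the protected engagement's data."""
    protected_engagement: str
    conflicting_engagements: frozenset


def blocked_users(wall: EthicalWall, staffing: Dict[str, Set[str]]) -> Set[str]:
    """Return every user currently staffed on any conflicting engagement."""
    blocked: Set[str] = set()
    for engagement in wall.conflicting_engagements:
        blocked |= staffing.get(engagement, set())
    return blocked


# Illustrative staffing data: engagement id -> users assigned to it.
staffing = {
    "universal-bigness-advisory": {"ana", "raj"},
    "competitor-a-audit": {"lee"},
    "competitor-b-tax": {"sam"},
}

wall = EthicalWall(
    protected_engagement="universal-bigness-advisory",
    conflicting_engagements=frozenset({"competitor-a-audit", "competitor-b-tax"}),
)

before = blocked_users(wall, staffing)

# Raj rolls onto a competitor engagement; recomputing picks this up.
staffing["competitor-a-audit"].add("raj")
after = blocked_users(wall, staffing)

print(sorted(before))
print(sorted(after))
```

Because the blocked list is recomputed from staffing each time, a move onto a competitor engagement shows up without anyone editing the wall itself. In this example Raj would also need to be removed from the Universal Bigness engagement, which is the kind of conflict a real system would have to flag.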

Accounting firms go through incredible efforts to ensure these permissions are correct and updated. It’s a significant burden to manage, and, despite best efforts, permissions often simply aren’t correct or updated. These unintentional permissions errors could put firms at risk of contract breaches, client departures, reputational damage, and regulatory fines and lawsuits.

Even among the most tech-savvy firms, Risk and IT teams often take months to write and build ethical boundaries for each client engagement within their software’s data structure. This policy creation requires a deep understanding of how engagements are organized within the firm and which systems store these engagements.

Microsoft Copilot is the current de facto AI assistant, and it can access the company’s full Microsoft tech stack. Copilot can surface content from any document and shares that information based on permissions, which are often incorrect or outdated. This creates the potential for oversharing confidential client engagement data with an unauthorized user. It is this challenge that has slowed firms’ adoption of Copilot, as the concern about oversharing outweighs the productivity benefits it offers.

Three Steps Before Unleashing AI

Most generic data governance and security software systems don’t address accounting firms’ need to secure data access around their unique client/engagement data structure. Generic security platforms generally do not protect client/engagement information with respect to a firm’s client commitments, industry-specific regulations, or firm policy.

Accounting firms are wisely concerned about implementing tech without prep. But there are three steps the Risk and IT teams can take to protect data while enabling practitioners to leverage the full value of the most innovative AI. These steps help enable a firm to build its own walls to manage client engagement data, whether with in-house technology or using a system with this capability.

- Ensure data across your Microsoft stack is properly structured around the client-engagement model. Check that the permissions for each system are consistent and connected to other Microsoft systems, allowing a user to sign in once, and have consistent access across the Microsoft stack according to each system’s permissions.

- Ensure your firm’s access permissions policies are updated and in line with client contracts and regulatory specifications. Take inventory of all permissions and policies and compare that to a search across all client contracts. Once those permissions and contracts are aligned, confirm that those permissions meet regulatory policies.

- Ensure the permissions across your Microsoft ecosystem are aligned with your firm’s updated data access policies. It’s crucial that these permissions are tied to your user systems as well as your practice management systems, so that they automatically stay up to date as people join and leave engagements.
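The inventory-and-compare idea in these steps can be sketched in a few lines. The example below assumes a firm has already exported two data sets: the access grants actually in place for each engagement, and the current staffing from its practice management system. The function name `find_overshared`, the data layout, and all identifiers are hypothetical, and no real Microsoft API is being called.

```python
from typing import Dict, List, Set, Tuple


def find_overshared(
    grants: Dict[str, Set[str]],
    staffing: Dict[str, Set[str]],
) -> List[Tuple[str, str]]:
    """Return (user, engagement) pairs where a user holds access to an
    engagement they are not currently staffed on."""
    findings: List[Tuple[str, str]] = []
    for engagement, users_with_access in grants.items():
        allowed = staffing.get(engagement, set())
        for user in users_with_access - allowed:
            findings.append((user, engagement))
    return sorted(findings)


# Illustrative export of who can currently open each engagement's files.
grants = {
    "universal-bigness-advisory": {"ana", "raj", "lee"},
    "competitor-a-audit": {"lee"},
    "competitor-b-tax": {"sam", "kim"},
}

# Illustrative staffing from the practice management system.
current_staffing = {
    "universal-bigness-advisory": {"ana", "raj"},
    "competitor-a-audit": {"lee"},
    "competitor-b-tax": {"kim"},
}

overshared = find_overshared(grants, current_staffing)
for user, engagement in overshared:
    print(f"{user} has access to {engagement} but is not staffed on it")
```

Run on a schedule, or whenever staffing changes, a check like this turns stale permissions into a short review list before an AI assistant can surface the underlying documents.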

By taking the above steps, accounting firms can unlock the true power of Generative AI: all professionals efficiently sharing knowledge while the firm is protected by a robust security posture.

====

Jey Purushotham is a practice group leader at Intapp.

Thanks for reading CPA Practice Advisor!